Explore the list of questions in the menu to the left. Here you can find solutions to commonly asked questions.

If you have general questions about using Gorilla, try these How To Guides:

There are generally three reasons why you might not be able to edit a task / questionnaire / experiment:

Details of what to do:

You've set up your display, but when you preview your task the display is skipped over and isn't shown.

There are two common causes for this issue:

Here's what to do in each case:

1. You have created the display properly in the task builder, but you haven't entered the display name correctly into the spreadsheet.

Solution:

2. Your display name contains spaces, special characters, or punctuation.

Display names are case sensitive and must match exactly, character for character, so the most common reason for a display not appearing is 'stray spaces'.

It's very easy to accidentally put an extra space into your display names (or when entering these names into the spreadsheet). Most commonly this happens at the beginning or end of the name when entering the display into the spreadsheet.
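If your spreadsheet is long, checking every display name by eye is tedious. The Python sketch below is one way to spot stray spaces and case mismatches in a spreadsheet saved as CSV. It assumes the display names sit in a column headed `display` and that you type in the display names exactly as they appear in the Task Builder; it is an illustration, not a Gorilla tool.

```python
import csv
import io


def find_display_name_problems(csv_text, display_names, column="display"):
    """Report spreadsheet rows whose display name doesn't exactly match a built display.

    csv_text: the task spreadsheet saved as CSV text.
    display_names: the display names exactly as written in the Task Builder.
    column: the spreadsheet column holding display names (assumed to be 'display').
    """
    lowered = {name.lower(): name for name in display_names}
    problems = []
    reader = csv.DictReader(io.StringIO(csv_text))
    # Row 1 is the header, so data rows start at row 2 (matching Excel's numbering).
    for row_number, row in enumerate(reader, start=2):
        value = row.get(column) or ""
        if value == "" or value in display_names:
            continue  # blank cells and exact matches are fine
        if value.strip() in display_names:
            problems.append((row_number, value, "stray space"))
        elif value.lower() in lowered:
            problems.append((row_number, value, f"case mismatch (expected {lowered[value.lower()]!r})"))
        else:
            problems.append((row_number, value, "no display with this name"))
    return problems


example = "display,ANSWER\ninstructions,\ntrial ,left\nTrial,right\ndebrief,\n"
issues = find_display_name_problems(example, {"instructions", "trial", "debrief"})
```

Here `issues` flags row 3 (a trailing space after `trial`) and row 4 (`Trial` with a capital T).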

Solution:

3. "I've tried the above but my Display still isn't showing!"

Get in contact with us via our Support Contact Form. Provide us with a link to your task and we will happily take a look at your issue directly.

You need to make sure you've set up a way for the participant to advance to the next screen.

You could:

There are three common reasons why your task preview may be different from what you expected:

1. I get a blank screen when previewing my Task, or part-way through previewing it.

2. I get a blank screen and the error message 'No source for the media could be found! Try refreshing the page. If the problem persists, contact the researcher for the experiment'.

3. When I preview my Task it doesn't look how I expect it to (but my displays are working as I expect them to).

The spreadsheet is the basis for every task in Gorilla: it drives the task, telling Gorilla what should be displayed and in what order. This means that you have to upload a spreadsheet for a task to work in Gorilla, even for the simplest task.

The spreadsheet contains information about each trial of the task in a simple table format, with one trial per row.

In Gorilla Task Builder, you create displays (e.g. 'instructions', 'trial' and 'debrief' displays) that contain screens with specific objects.

You do not need to manually set up each of the trials on separate screens within the task editor - you only build each screen (or set of screens in a display - usually one individual trial) once and then simply list that display multiple times within the spreadsheet to tell Gorilla how many times the display should be shown during your task.

Building your task this way is an efficient process as it reduces the time it takes to modify your task.
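To make the idea concrete, here is a toy Python model (not how Gorilla is implemented) of a spreadsheet driving a task: each display is built once as a fixed list of screens, and each spreadsheet row names the display to show and supplies row-specific content. The display names, screen names and the `Stimulus` column are hypothetical.

```python
# Hypothetical displays, each built once as a fixed sequence of screens.
DISPLAYS = {
    "instructions": ["Welcome"],
    "trial": ["Fixation", "Stimulus", "Response"],
    "debrief": ["Thank you"],
}

# One row per trial; the 'display' column says which display to show.
SPREADSHEET = [
    {"display": "instructions", "Stimulus": ""},
    {"display": "trial", "Stimulus": "cat.png"},
    {"display": "trial", "Stimulus": "dog.png"},
    {"display": "trial", "Stimulus": "fish.png"},
    {"display": "debrief", "Stimulus": ""},
]


def screen_sequence(spreadsheet, displays):
    """Expand spreadsheet rows into the ordered list of screens a participant sees."""
    sequence = []
    for row in spreadsheet:
        for screen in displays[row["display"]]:
            # Each screen reads row-specific content (here, the Stimulus column) from its row.
            sequence.append((screen, row["Stimulus"]))
    return sequence


screens = screen_sequence(SPREADSHEET, DISPLAYS)
```

Adding a fourth trial would mean adding one more `trial` row to the spreadsheet; the displays themselves stay unchanged.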

There are three common reasons why you might not be able to upload a spreadsheet:

There are two different causes for spreadsheet columns appearing to be missing, both of which typically occur on your Task's spreadsheet page after a new spreadsheet has been uploaded.

Note: This issue usually only arises when using multiple spreadsheets within your task.

To debug this issue first identify what 'type' of spreadsheet column has gone missing:

Here's what to do in each case:

1. Spreadsheet Metadata Column is missing:

Please refer to this dedicated troubleshooting page: Metadata column is missing.

2. Spreadsheet Source Column is missing:

You uploaded a new spreadsheet to your task and one or more of the columns that control some aspect of your task do not appear.

Explanation: Gorilla helpfully removes columns which it detects are not in use. This includes empty columns that are not referenced within the task structure (i.e. columns not linked to a specific component via the binding feature).

This helps keep both the task spreadsheet and the subsequent metrics produced from the task as clean as possible.
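The rule above can be sketched in Python as a quick check before uploading. This is an approximation under stated assumptions: a cell counts as empty only if it is completely blank, emptiness is judged across the rows of every spreadsheet uploaded to the task, and you supply the list of bound column names yourself. It is not Gorilla's code.

```python
def columns_to_drop(header, rows, bound_columns):
    """Columns that would be hidden under the rule described above (as assumed here).

    header: column names from the uploaded spreadsheet.
    rows: every data row (as dicts) from every spreadsheet uploaded to the task.
    bound_columns: column names referenced by components via binding.
    """

    def is_empty(column):
        # Treated as empty only if every cell in the column is blank.
        return all((row.get(column) or "") == "" for row in rows)

    return [col for col in header if is_empty(col) and col not in bound_columns]


header = ["display", "Stimulus", "ANSWER", "Notes"]
rows = [
    {"display": "trial", "Stimulus": "cat.png", "ANSWER": "", "Notes": ""},
    {"display": "trial", "Stimulus": "dog.png", "ANSWER": "", "Notes": ""},
]
dropped = columns_to_drop(header, rows, bound_columns={"Stimulus", "ANSWER"})
```

In this example `ANSWER` is empty but survives because it is bound, while `Notes` is both empty and unbound. If a column you need shows up in the result, giving it data or binding it to a component should, by the same rule, keep it visible.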

Common Causes:

Solutions:

Case 1

Case 2

Case 3

Please refer to this dedicated troubleshooting page: Metadata column is missing for help with this issue.

There are two different causes for metadata columns appearing to be missing, both of which typically occur on your task's spreadsheet page after a new spreadsheet has been uploaded.

Common Causes:

Explanation: Gorilla helpfully removes all columns which it detects are not in use. This includes empty columns and those not referenced within the task structure. Here, an 'empty column' means one with no data entries in any row beneath the column heading.

This helps keep both the task spreadsheet and the subsequent metrics produced from the task as clean as possible.

Solutions:

Case 1

Case 2

A metadata column with data in it will stay visible as long as at least one of the spreadsheets uploaded to the task contains at least one row of data in that column.

Usually this is not problematic but there are instances where empty metadata columns are required. You can read more about this and using metadata columns in the spreadsheet guide.

Recommended Solution:

Other solutions:

Spreadsheet columns are visible to all spreadsheets, regardless of whether they are in use by a particular spreadsheet or not. These metadata columns will now always appear in each of your spreadsheets, even when they are empty. This is particularly useful when working with spreadsheet manipulations and/or scripts.

Sometimes you may notice additional spreadsheet columns in your spreadsheet and/or metrics. Generally these are not problematic to the running of the task and can be either safely ignored or removed.

There are three common causes for extra spreadsheet columns appearing:

Common Causes:

Solutions:

Case 1

Regardless of whether a particular column is in use by an individual spreadsheet, non-empty columns which belong to any spreadsheet uploaded to a task will appear in all spreadsheets for that task.

For example, if spreadsheet-1 contains columns 'A' and 'B' and spreadsheet-2 contains columns 'C' and 'D', then as long as there is at least one row of data under each column heading, all four columns ('A', 'B', 'C' and 'D') will appear in both spreadsheet-1 and spreadsheet-2.
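The visibility rule in this example, plus Case 2 below (a column referenced by a component appears even though no spreadsheet contains it), can be sketched in Python as follows. The data structures and the misspelled column name are hypothetical, and this models the behaviour described on this page rather than Gorilla's internals.

```python
def visible_columns(spreadsheets, bound_columns=()):
    """Columns shown on every spreadsheet of a task, per the rules described on this page.

    spreadsheets: dict of spreadsheet name -> list of row dicts.
    bound_columns: columns referenced by components, even if absent from every file.
    """
    visible = []
    for rows in spreadsheets.values():
        for row in rows:
            for column, value in row.items():
                # A column is shown everywhere once any spreadsheet has data in it.
                if value not in ("", None) and column not in visible:
                    visible.append(column)
    for column in bound_columns:
        if column not in visible:
            visible.append(column)  # shows up as an empty column
    return visible


sheets = {
    "spreadsheet-1": [{"A": "1", "B": "2"}],
    "spreadsheet-2": [{"C": "3", "D": "4"}],
}
everywhere = visible_columns(sheets)
with_typo = visible_columns(sheets, bound_columns=["Stimuls"])  # misspelled binding
```

`everywhere` contains 'A', 'B', 'C' and 'D'; `with_typo` additionally contains the empty 'Stimuls' column created by the misnamed binding.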

If you wish to remove a column:

The column should now be removed.

Case 2

If a spreadsheet column is referenced in a component in your task but is not included in your uploaded spreadsheet then the column name will appear as an empty column on your task's spreadsheet.

If you wish to remove this column:

Alternatively - if you intended to use this column but it has been misnamed: Create a new column in the binding menu of the component where it's been misnamed and rename it so that it exactly matches the spreadsheet column heading you wish to use instead.

Case 3

You can safely ignore this spreadsheet column and remove any references to this column from your metrics. It will not affect the running of your task.

If you wish to remove this column:

The column should now be removed.

As of August 2018, this problem should not occur, because Gorilla is now able to interpret special characters saved in non-UTF-8 formats. However, if it does occur, follow the steps below.

This problem is typically encountered when uploading a spreadsheet for your Task in a csv format.

Explanation: This problem occurs because, in many programs like Excel, csv files are not saved using UTF-8 encoding by default. This means that these files don’t save special characters in a way that can be universally understood and recognised. When they are uploaded to Gorilla, because the special characters aren’t readable, they are instead replaced with the diamond with a question mark symbol which looks like this: �.

Solution: The way to resolve this is to save your csv file as an XLSX or ODS file, or as a 'CSV UTF-8 (Comma delimited)' file.

Steps:

Older versions of Excel (2013 and earlier) don't directly offer a 'CSV UTF-8' file format. If you still wish to save this as a CSV, follow the steps below:

If you are using an older version of Excel and trying to resave a csv file to use UTF-8 encoding, the above steps do not work reliably! In this case:

This will then convert your csv file to use UTF-8 encoding.
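If you are comfortable with a little scripting, the conversion can also be done outside Excel. The Python sketch below assumes the original file was saved as Windows-1252 (`cp1252`), a common legacy default on Western-European Windows setups; if your file came from a different system, substitute its actual encoding. The last line illustrates where the � symbol comes from.

```python
from pathlib import Path


def reencode_to_utf8(raw: bytes, source_encoding: str = "cp1252") -> bytes:
    """Decode bytes saved in a legacy encoding and re-encode them as UTF-8."""
    return raw.decode(source_encoding).encode("utf-8")


def convert_csv_file(src: str, dst: str, source_encoding: str = "cp1252") -> None:
    """Write a UTF-8 copy of the CSV at `src` to `dst`."""
    # Working on raw bytes keeps the original line endings untouched.
    Path(dst).write_bytes(reencode_to_utf8(Path(src).read_bytes(), source_encoding))


# Where the diamond symbol comes from: cp1252 bytes read as if they were UTF-8.
garbled = "café".encode("cp1252").decode("utf-8", errors="replace")  # 'caf\ufffd', displayed as 'caf�'
```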

In most file explorers, you won’t get an indication of whether a csv has been saved as UTF-8 or not.

Further, the default for most spreadsheet programs is to save the csv as a normal (non-UTF-8) CSV, even if this is a previously UTF-8 compliant CSV that you’ve downloaded from Gorilla.

Always make sure it is being saved in the UTF-8 compliant format if you are using special characters!
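Since file explorers won't tell you, a rough check is to see whether the file's bytes decode as UTF-8. The Python sketch below does that. It cannot tell a UTF-8 file apart from a plain-ASCII file with no special characters (which uploads fine either way), and a legacy-encoded file can occasionally pass by coincidence, so treat the result as a hint rather than a guarantee.

```python
import codecs


def describe_csv_encoding(raw: bytes) -> str:
    """Rough check of whether a file's bytes are valid UTF-8."""
    if raw.startswith(codecs.BOM_UTF8):
        return "UTF-8 with byte-order mark"
    try:
        raw.decode("utf-8")
    except UnicodeDecodeError:
        return "not UTF-8 (special characters may turn into the replacement symbol)"
    return "valid UTF-8 (or plain ASCII)"


# Typical use: describe_csv_encoding(open("my_spreadsheet.csv", "rb").read())
```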

If you are previewing your task and notice that some of the stimuli you expected to see are not displaying, try the following steps:

If your video stops playing part way through, this is typically because your video file is corrupted in some way.

On Chrome, an error message will appear when this occurs, and video play error information will appear in your metrics. This means that if you launch an experiment containing a corrupted video, only participants on Chrome will show any error in the data.

In this case, you need to use a different video file.

You can read more about video errors in our media errors guide.

Is your audio set to play automatically instead of on a click or key press?

If so, this problem is likely caused by Autoplay issues. See our media autoplay guide for further information.

If your audio is set to manual play (activated in some way by a keypress or mouse click), please contact us via our support contact form and include information about the device and browser this problem occurred on.

For a full guide to media error messages check out our dedicated Help Page: Troubleshooting Media Error Messages.

There are three places where you may encounter a 'Media Error Message'.

Explanation:

Gorilla's Media Error messages have been added to help you create a task which will work consistently and robustly across all browsers and devices.

Media Error messages will be triggered if Gorilla notices that there is something wrong with your stimuli which could result in your task performing sub-optimally or, in the worst case, prevent your task from working at all.

Solution:

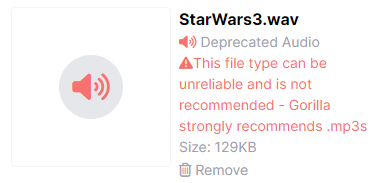

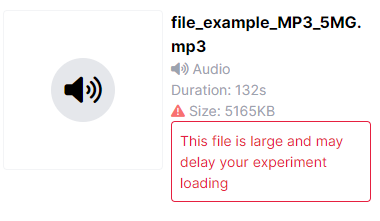

1) The most common Media Error message you will see informs you that the stimuli you have uploaded are not in a supported format.

When you preview your Task you may find that your task and stimuli seem to work just fine. However, depending upon the stimuli type you may find that performance will differ between different browsers and different devices.

To avoid any risk and disappointment that your task will not display correctly for some participants we highly recommend you convert your stimuli to one of the supported web-compatible file types.

If you are not sure of which file types are supported you can find out here: Supported File Types. Alternatively, you can find out which file types are supported in a particular component by reviewing that component's dedicated section in the Task Builder 2 Components Guide or the Questionnaire Builder 2 Objects Guide.

2) The most common Media Error message which occurs when previewing a Task is that the media cannot be found.

If these steps do not fix your error please refer to our dedicated Media Error Messages help page.

3) As feedback from a participant who has taken part in your Live experiment.

Use our dedicated Troubleshooting Media Error Messages help page to identify the message you received and find the appropriate solution.

The majority of reports from participants about Media Error messages can be avoided by previewing your task and experiment fully before launching your experiment.

Once this is successful, a small pilot across all browsers you intend to use during your experiment is very highly recommended.

Piloting your full study across all browsers and devices you intend to use will usually pick up any additional media errors and allow you to prevent them from recurring when you come to launch your full experiment.

There are two common reasons you might not be able to preview an experiment:

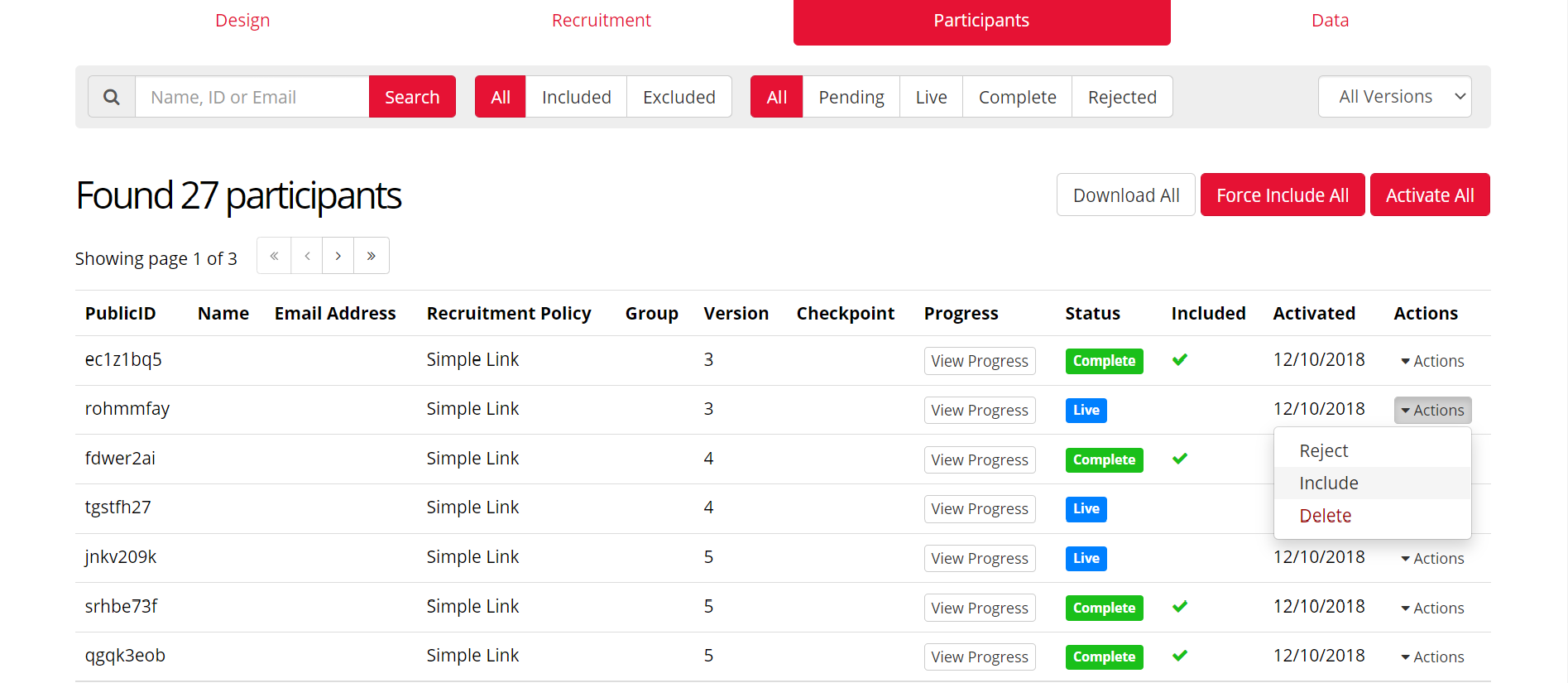

As soon as a participant starts an experiment (clicks on the recruitment link or is sent a recruitment email) they are marked as 'Live'. They will remain 'Live' until they reach a finish node, at which point they are marked as 'Completed'. When they reach a finish node, they are automatically 'included', their metrics become available in your data, and the participant token is consumed. Alternatively, participants may be branched to a reject node, at which point they are recorded as 'Rejected' and the reserved token is returned to the Recruitment Target, allowing another participant to enter the experiment in the Rejected participant's place.
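The status rules above can be summarised as a small state machine. The Python sketch below is a toy model written for this page, not Gorilla's implementation: a participant enters as Live, moves to Completed (consuming a token) at a finish node, or moves to Rejected at a reject node, which frees their place in the Recruitment Target. It also shows why an experiment can be 'Full' while participants are still Live.

```python
from enum import Enum


class Status(Enum):
    LIVE = "Live"
    COMPLETED = "Completed"
    REJECTED = "Rejected"


class Experiment:
    """Toy model of the participant status and token rules described above."""

    def __init__(self, recruitment_target: int):
        self.recruitment_target = recruitment_target
        self.status = {}  # participant id -> Status
        self.tokens_consumed = 0

    def is_full(self) -> bool:
        # Live and Completed participants both hold a place in the Recruitment Target.
        taken = sum(1 for s in self.status.values() if s is not Status.REJECTED)
        return taken >= self.recruitment_target

    def enter(self, pid: str) -> None:
        if self.is_full():
            raise RuntimeError("Experiment is full")
        self.status[pid] = Status.LIVE

    def reach_finish_node(self, pid: str) -> None:
        self._require_live(pid)
        self.status[pid] = Status.COMPLETED  # included automatically
        self.tokens_consumed += 1  # the token is consumed

    def reject(self, pid: str) -> None:
        self._require_live(pid)
        self.status[pid] = Status.REJECTED  # the place returns to the Recruitment Target

    def _require_live(self, pid: str) -> None:
        if self.status.get(pid) is not Status.LIVE:
            raise ValueError(f"{pid} is not Live")
```

With a Recruitment Target of 2, two Live participants make the experiment Full even though neither has finished; rejecting one of them lets a new participant in.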

When a participant is Live, and has only recently joined the experiment, it's reasonable to assume that they are still taking part and just need a little more time to finish. If a participant has been Live for a very long time the most likely scenario is that, unfortunately, they have chosen to leave the experiment. This could have happened very early on: perhaps they chose not to consent. Or, very late in the experiment: they may have gotten interrupted by something else and had to leave the experiment.

To help you work out where a participant is in an experiment, it's really helpful to include Checkpoint Nodes.

These are available in the experiment tree and we recommend putting them in at key points of your experiment. The most recent Checkpoint node that a participant passed through is recorded in the Participant tab of your experiment. This way you can easily see how far through your experiment a Live participant is and how likely it is that they are still working on your experiment, or have simply left. This also allows you to make an informed choice on whether or not to include a live participant's data (which consumes a token), or reject them and have the token returned to your account. You can then choose to include only those participants who have progressed far enough through your experiment that their data is worthwhile including.
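Once you know each Live participant's most recent Checkpoint, the include/reject decision can be made systematically. The Python sketch below splits Live participants into 'include' and 'reject' lists based on a minimum Checkpoint you choose. The Checkpoint names and the shape of the input are hypothetical, and you would still carry out the include/reject actions yourself in the Participants tab.

```python
# Hypothetical Checkpoint node names, in the order they appear in the experiment tree.
CHECKPOINTS = ["Consent", "Demographics", "Main Task", "Debrief"]


def triage_live_participants(last_checkpoint, min_checkpoint):
    """Split Live participants into (include, reject) lists.

    last_checkpoint: dict of participant id -> most recent Checkpoint passed (None if none).
    min_checkpoint: the earliest Checkpoint whose data you consider worth a token.
    """
    threshold = CHECKPOINTS.index(min_checkpoint)
    include, reject = [], []
    for pid, checkpoint in last_checkpoint.items():
        # Participants who haven't passed any Checkpoint rank below all of them.
        reached = CHECKPOINTS.index(checkpoint) if checkpoint in CHECKPOINTS else -1
        (include if reached >= threshold else reject).append(pid)
    return include, reject


live = {"p1": "Debrief", "p2": "Consent", "p3": None, "p4": "Main Task"}
to_include, to_reject = triage_live_participants(live, "Main Task")
```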

Read more about participant status, and how to include and reject participants when needed, in our Participant Status and Tokens guide!

If your participants are being turned away with the message that the Experiment is full, there are two possibilities:

If your Experiment is ‘Full’ this means that your Recruitment Target has been reached. In other words, that number of participants have entered your Experiment. This may also mean that some of your participants have not yet completed the Experiment and are still ‘Live’.

If your participants have been Live for a large amount of time, you may need to manually reject them in order to allow more participants to enter the experiment and complete your recruitment target.

For information on how to do this, see the Participant Status and Tokens guide.

This question is linked to our 'It doesn't work when I preview my experiment' troubleshooting example.

Worked example:

You have set up your Experiment Tree but then realised that you would like to make some changes to your Questionnaire/Task. You go to the Questionnaire/Task Builders, make changes, and commit new versions. Some time later, perhaps when previewing your experiment before launching it, you notice your experiment is not running as you expected (e.g. the task works differently to what you have just committed in the Task Builder)!

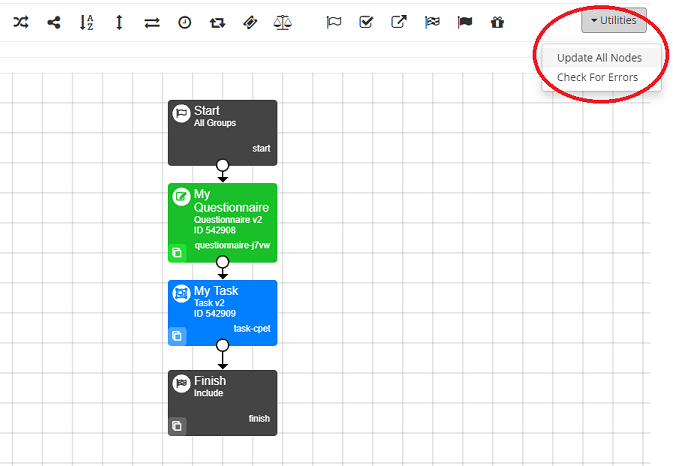

A quick check we recommend is seeing whether all nodes within your Experiment Tree are up-to-date, according to your latest commits within the Questionnaire/Task Builders. The Experiment Tree does not update nodes automatically - changes need to be applied and committed by you.

Therefore, make sure to keep your individual nodes within the Experiment Tree up-to-date! You could update all nodes quickly by clicking on Utilities -> Update All Nodes in the top right corner of your Experiment Tree Design Tab:

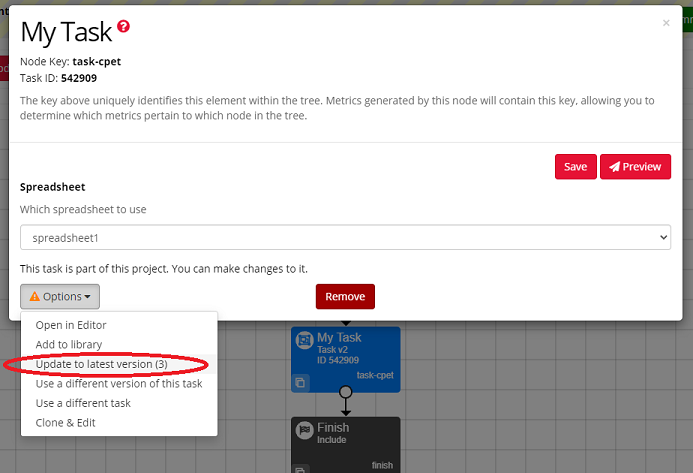

Or you could update separate nodes by clicking on each of them and choosing 'Update to latest version' from the Options drop-down menu:

This question is linked to Missing Data troubleshooting example.

Worked example:

Perhaps you are midway through or completely finished (woohoo!) running your experiment and want to have a look at your collected data. You see in your Participants Tab in the Experiment Tree that 50 participants completed your study (learn more about participant progress here), but when you go to the Data Tab you realise there are only 40 people you can download data for!

Don't worry: in most cases the solution is very simple. You need to look at the correct version of your data!

Perhaps you are not seeing data from all participants because some of them completed an older version of your experiment. If you update nodes while running a live experiment, participants who entered your experiment before you updated your nodes will remain in the version they started in. Those who enter after you updated your nodes will automatically be assigned to the newest version of your experiment.

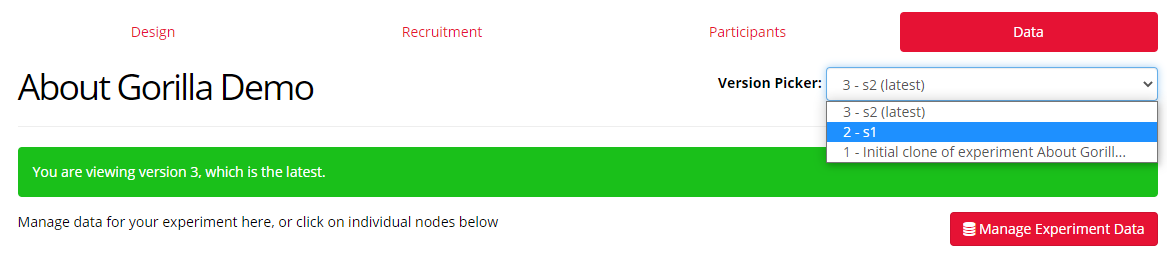

Make sure you select the correct version of your experiment's data by choosing the experiment version from the Version Picker in the top right corner of your Data Tab! It might turn out that data from those 10 apparently missing participants is ready to download from an older version of your experiment! You can check which version of the experiment participants completed in the Participants Tab of the Experiment Builder.
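If you copy the participant list out of the Participants tab, counting completions per version shows where the 'missing' participants went. In the Python sketch below, the `(participant_id, version, status)` tuple layout is an assumption made for illustration, and the example numbers mirror the 50 versus 40 scenario above.

```python
from collections import Counter


def completed_per_version(participants):
    """Count Complete participants per experiment version.

    participants: iterable of (participant_id, version, status) tuples
    (a layout assumed for this sketch).
    """
    return Counter(version for _, version, status in participants if status == "Complete")


example = (
    [(f"p{i}", 1, "Complete") for i in range(10)]
    + [(f"q{i}", 2, "Complete") for i in range(40)]
    + [("r1", 2, "Live")]  # Live participants are not counted
)
counts = completed_per_version(example)  # 10 in version 1, 40 in version 2
```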

We also recommend checking participant status. Remember that your data is only ready to download for participants who have the Complete status, which indicates that they have gone through your whole experiment and reached the Finish Node. Participants who are currently Live (because they are still completing your experiment or have perhaps dropped out) will not automatically generate data ready for download yet. You can change this manually by including them: including Live participants will allow you to look at the data they have generated so far, at the cost of one token per participant.

Read more about participant status and how to include/reject participants in our Participant Status and Tokens guide!

For more troubleshooting on what seems to be 'missing' experiment data see the Missing Data troubleshooting page. Otherwise, you could contact our friendly support team if you are worried about your data and would like us to have a closer look!

Here are some reasons your branching may not be working as you expect:

Here's what to do in each case:

1. You are not saving the data correctly in your questionnaire or task.

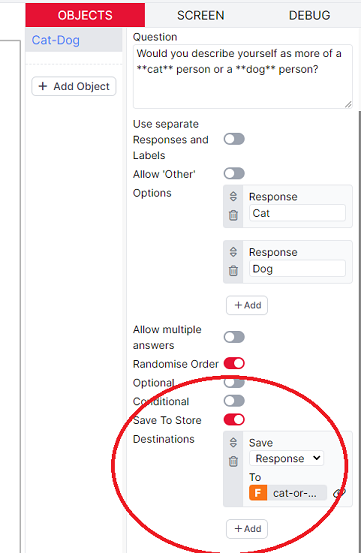

In Questionnaire Builder 2: In QB2, you must turn on the toggle for 'Save To Store' which will allow answers in your questionnaire to be used elsewhere in your questionnaire or experiment. You must then create a new Field name to bind (save) participants' answers into.

The Field name is what must go into the Property setting on the Branch node.

In Task Builder 2: In TB2, you need to make sure you're saving the data that you need to a Field in the Store. You can find out how to do that with our Saving Data to the Store support page.

The Field name is what must go into the Property setting on the Branch node.

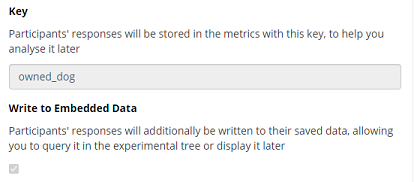

In Questionnaire Builder 1: To save an answer as embedded data in QB1, you must tick the 'Write to Embedded Data' option, located under the Key field in the questionnaire widget:

The Key name is what must go into the Property setting on the Branch node.

In Task Builder 1: In TB1, you need to save the information you need as Embedded Data. It is the name of the embedded data that must go into the Property setting on the Branch node.

2. The Property in your Branch Node does not match the name of the Field you've saved to the store (Questionnaire Builder 2 and Task Builder 2), the Key (Questionnaire Builder 1), or the name of your embedded data (Task Builder 1).

Find the Field that you've saved to the store (QB2 and TB2), the Key (QB1), or the name of your embedded data (TB1) in the task or questionnaire builder as appropriate (see the previous paragraphs for information on how to set these up in the first place). Copy the Field, Key, or embedded data name exactly and paste this into the Property field of the Branch node. This will ensure that your Branch Node can locate the embedded data.

If this still doesn't work, your field or embedded data may be accidentally set up as Spreadsheet or Manipulation, rather than Static. See the 'Store/embedded data don't display correctly' troubleshooting page for how to make sure you're using the correct name in each case.

3. You are using one Branch Node when your question allows multiple responses.

Each Branch Node can only handle one exclusive response (e.g. sending participants one way if they answered Yes, OR another way if they answered No, OR another way if they answered Maybe). If you are branching participants based on a component/widget (in QB2 and QB1 respectively) that accepts multiple responses (e.g., when allowing multiple answers on the Multiple Choice component to create a checklist), you will need to configure one Branch Node for each possible option they could select. You will then need to connect these Branch Nodes together to capture every combination of responses. For an example of how to set up Branch Nodes to capture multiple responses, see this Branching Tutorial.

If your question requires only one response, make sure to use a component that enforces this, such as Multiple Choice (with multiple responses disallowed to create radio buttons) or Dropdown. You will then only need to use one Branch Node.
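The difference between one Branch Node and a chain of them can be illustrated with a toy Python model. Two assumptions are made purely for the sketch: a checklist answer is represented as a set of ticked options, and a single Branch Node follows the first group that matches. Neither describes how Gorilla stores or evaluates responses internally; the options are made up.

```python
# Hypothetical checklist options, in the order the Branch Node groups are listed.
OPTIONS = ["Coffee", "Tea", "Juice"]


def single_branch_node(ticked, options, default="None ticked"):
    """One Branch Node: the participant leaves along exactly one path."""
    for option in options:
        if option in ticked:
            return [option]  # first match wins; any other ticked options are ignored
    return [default]


def chained_branch_nodes(ticked, options):
    """One Branch Node per option, connected in sequence: every ticked option is seen."""
    return [option for option in options if option in ticked]


answer = {"Tea", "Juice"}
one_node = single_branch_node(answer, OPTIONS)
chain = chained_branch_nodes(answer, OPTIONS)
```

With 'Tea' and 'Juice' both ticked, the single node only ever acts on 'Tea', while the chain acts on both.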

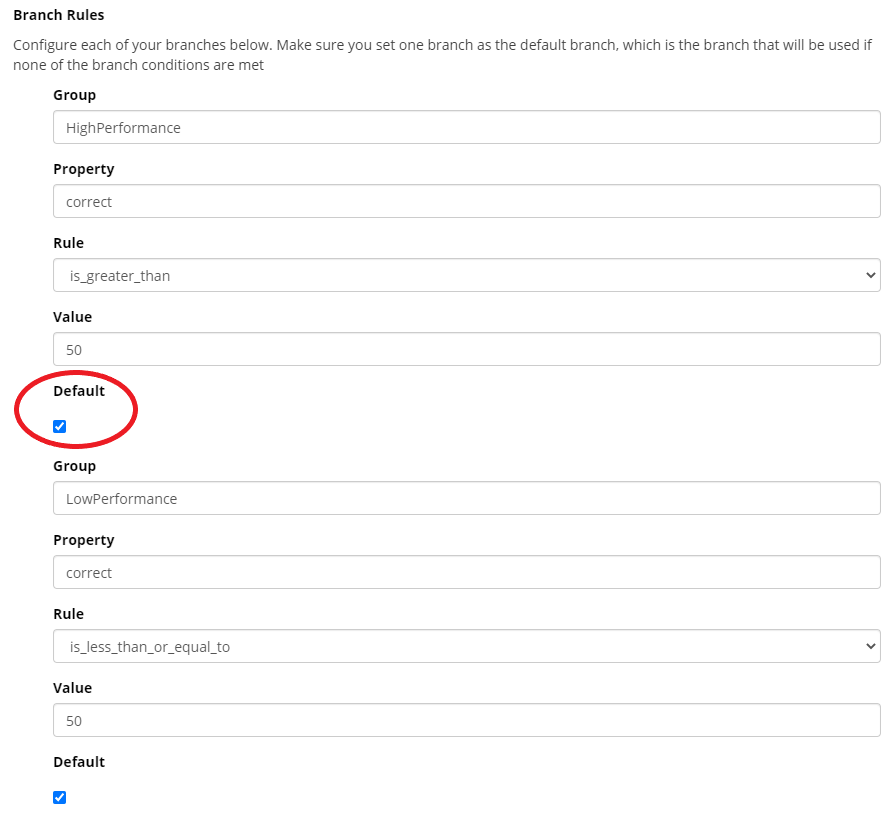

4. You have set more than one branch as default.

The image below shows a Branch Node set up incorrectly, with both branches selected as default.

To fix this, simply untick the Default box on one of the branches.

5. You have specified the wrong Value in your Branch Node.

The Value field in the Branch Node should relate to the data you use to satisfy the Rule of the branch. Say you are branching participants who fail an attention check to a Reject Node and your attention check involves one question, which the participant can either get correct or incorrect. There are a couple of different ways you could set this up in your task.

You could score their response and save the correct answer count as a numerical value, such as a 1 (the participant passed the attention check) or as a 0 (the participant failed). In the Branch node, you should put a 1 in the Value setting for the Pass group with the rule 'equals' and 0 as the Value for the Fail group, again with the rule 'equals'. You can see an example of this in the Performance Branching Tutorial.

Alternatively, you could save the exact answer that the participant gave, without directly scoring it as correct or incorrect in the task. The value of this data will either match the correct answer (if the participant passed the check), or it will not (if the participant failed). In this case, you should enter the correct answer as the Value for both Groups in your Branch Node, with the rule 'equals' for the Pass group and the rule 'not_equals' for the Fail group.

The important thing is to make sure your Value matches the type of data you are saving: a number if you are saving something you've scored (such as the correct answer count), or the actual answer if you are saving exactly what response the participant gave.
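The two set-ups above can be compared with a small Python sketch of how an 'equals'/'not_equals' rule might be evaluated. It assumes saved values are compared as plain text and that groups are checked in order, falling back to the single default group; the correct answer 'Blue' is a made-up example.

```python
def evaluate_branch_node(saved_value, groups, default_group):
    """Sketch of a Branch Node with 'equals' / 'not_equals' rules.

    saved_value: the value saved to the store / embedded data, as text.
    groups: list of (group_name, rule, value), checked in order.
    default_group: the single group used when no rule matches.
    """
    for group_name, rule, value in groups:
        if rule == "equals" and saved_value == value:
            return group_name
        if rule == "not_equals" and saved_value != value:
            return group_name
    return default_group


# Set-up 1: a scored response saved as 1 (pass) or 0 (fail).
scored = [("Pass", "equals", "1"), ("Fail", "equals", "0")]
# Set-up 2: the participant's exact answer, compared with the correct answer.
answer = [("Pass", "equals", "Blue"), ("Fail", "not_equals", "Blue")]

# Mismatch: a scored value checked against answer-style Values always lands in Fail.
mismatch = evaluate_branch_node("1", answer, "Fail")
```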

6. You have not updated all nodes in your Experiment Tree.

Setting up a Branch Node can be a multi-stage process, with a lot of edits to Tasks and Questionnaires along the way. In some cases, the process of saving data (as a Field in the Store, a Key, or embedded data) is set up perfectly in the latest versions of your tasks, but you just haven't updated all nodes in your Experiment Tree to use the most current versions! Try updating your nodes and see if that fixes the problem.

If you've checked all the above and are still having issues with branching, feel free to contact us with a link to your experiment and we will happily take a look!

Here are some common reasons why data may not display correctly:

Here's what to do in each case:

1. The key/name you are trying to retrieve does not exist, or there is a typo in the name.

When retrieving data from the store or embedded data, the name must exactly match the field, spreadsheet column, or manipulation that you're trying to retrieve. Store and embedded data are both case sensitive.

2. You are not using the correct syntax.

In Task Builder 2 and Questionnaire Builder 2, you can retrieve data from the store using the following syntax:

${store:FieldName}

Where 'FieldName' is the name of the field that you've stored the data in. Our TB2 How-To guide has more information about retrieving data from the store.

In the older Task Builder 1 and Questionnaire Builder 1, the syntax was:

$${EmbeddedDataName}

Where 'EmbeddedDataName' should be the name of the embedded data (in TB1) or the name of the Key (QB1). Retrieving embedded data in TB1 and QB1 is explained further in our embedded data walkthrough.
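To see why an exact, case-sensitive name matters, here is a minimal Python sketch of `${store:FieldName}` substitution. It is not Gorilla's implementation; in particular, leaving unknown references untouched is a choice made for this sketch, and the field name `Score` is hypothetical.

```python
import re

# Matches Task Builder 2 / Questionnaire Builder 2 style references: ${store:FieldName}
STORE_REFERENCE = re.compile(r"\$\{store:([^}]+)\}")


def render_store_references(text, store):
    """Replace ${store:Name} with the stored value, using an exact, case-sensitive lookup.

    References to names that don't exist are left as-is in this sketch,
    which makes typos easy to spot.
    """

    def substitute(match):
        name = match.group(1)
        return str(store[name]) if name in store else match.group(0)

    return STORE_REFERENCE.sub(substitute, text)


store = {"Score": 8}
ok = render_store_references("You scored ${store:Score} points.", store)
typo = render_store_references("You scored ${store:score} points.", store)
```

`ok` reads "You scored 8 points.", while `typo` keeps the unresolved reference because `score` and `Score` are different names.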

3. Your store/embedded data values are accumulating over multiple/repeated versions of the same task.

If you are saving data like number of correct/incorrect answers, points awarded to participants etc, and your participants do the same task more than once in your experiment, the data will accumulate across all versions of the task unless you specify that you want to reset it. For example, if they get 8/10 trials correct in your task, but after a distractor task they repeat it and score 10/10, the score will show as 18 correct answers. The solution depends on exactly how the versions of your task are set up in the Experiment Tree.

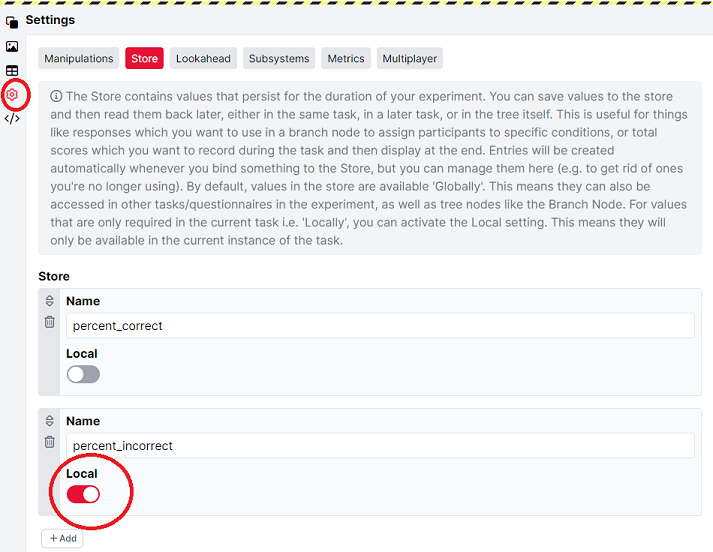

- If you don't intend to use the data elsewhere in the experiment, or if you are using a Repeat node, you can navigate to the Settings icon on the left, then to the Store tab, and turn the Local toggle on. When it is on, the data are localised to that instance of the task, which means they won't be available outside it. If the task repeats later in the experiment in a separate node, the data from the previous iteration won't interfere with the data from the new one.

- If you need to use the data elsewhere in the experiment tree (such as in other tasks, or in a Branch node) you will need the above setting turned off. When the Local toggle is off, the data becomes globalised to the rest of your experiment and can be used in other tasks or, for example, Branch nodes. However, to reset the data the next time your participants start the task, you can simply use the Set Field on Start component at the beginning of the task, such as on an instructions screen. If you reset it to '0', it will start from scratch the next time participants do the task, but still allow you to use the data elsewhere in your experiment tree until that point.
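The three behaviours above (accumulating by default, the Local toggle on, and resetting with Set Field on Start) can be simulated with a short Python sketch. The store is modelled as a plain dictionary and the field name `correct` is hypothetical; the numbers reproduce the 8/10 then 10/10 example.

```python
def run_task(experiment_store, trial_correct, local=False, set_field_on_start=None):
    """Simulate one run of a task that counts correct answers in a store field.

    experiment_store: dict shared across the whole experiment (the global store).
    trial_correct: list of booleans, one per trial.
    local: if True, the task uses its own fresh store (like the Local toggle).
    set_field_on_start: optional {field: value} applied when the task starts.
    Returns the store the task wrote to.
    """
    store = {} if local else experiment_store
    for field, value in (set_field_on_start or {}).items():
        store[field] = value
    for correct in trial_correct:
        if correct:
            store["correct"] = store.get("correct", 0) + 1
    return store


first_run = [True] * 8 + [False] * 2  # 8/10 correct
second_run = [True] * 10  # 10/10 correct

accumulating = {}
run_task(accumulating, first_run)
run_task(accumulating, second_run)  # accumulating["correct"] is now 18
```

Passing `set_field_on_start={"correct": 0}` to each run keeps the value global but restarts the count at each run, while `local=True` keeps the count out of the experiment-wide store entirely.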

If you've checked all the above and are still having issues with data displaying incorrectly, contact us with a link to your experiment and we will happily take a look!

These are the most common issues related to viewing metrics that are reported:

There are two main reasons for this issue:

Here are the solutions for each case:

For those who have downloaded the data file prior to August 2018:

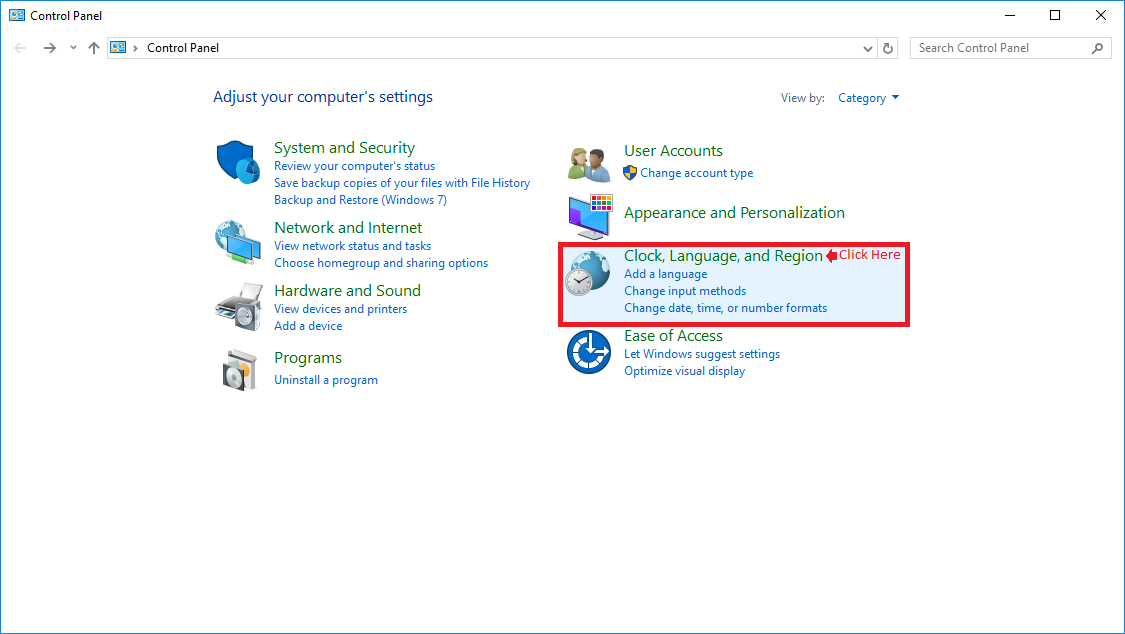

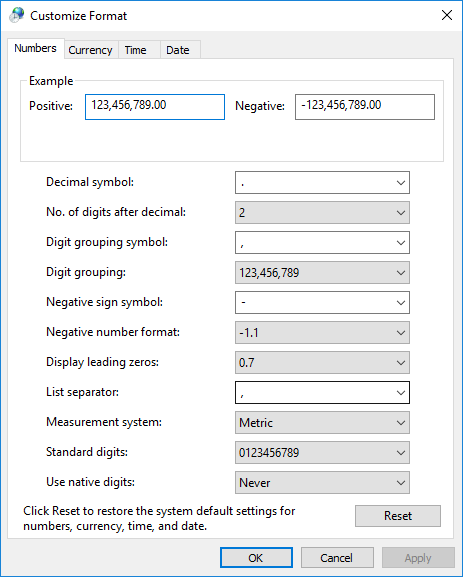

The solution for this issue is to change your computer's local 'Delimiter' settings. Please refer to the instructions below for your operating system.

Windows-7

Step 1: Click the Start button, and then click Control Panel

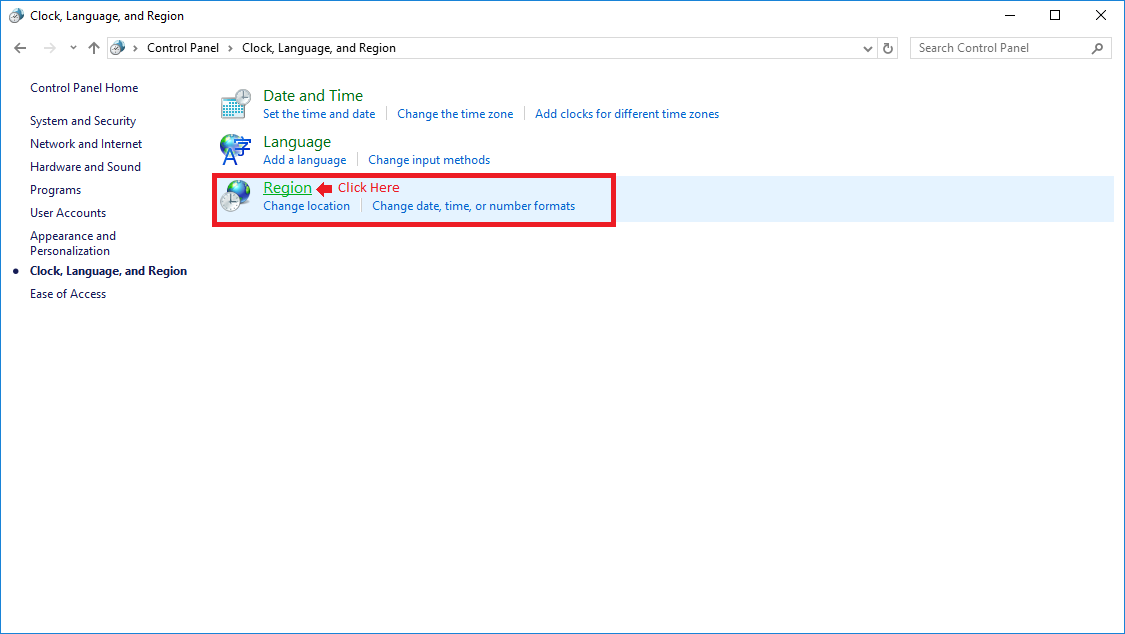

Step 2: Click the 'Clock, Language and Region' menu section.

Step 3: In this sub-menu, click 'Region' to display the Region 'dialog-box'

Step 4: Click Additional Settings button, to open the Customize Format 'dialog-box'.

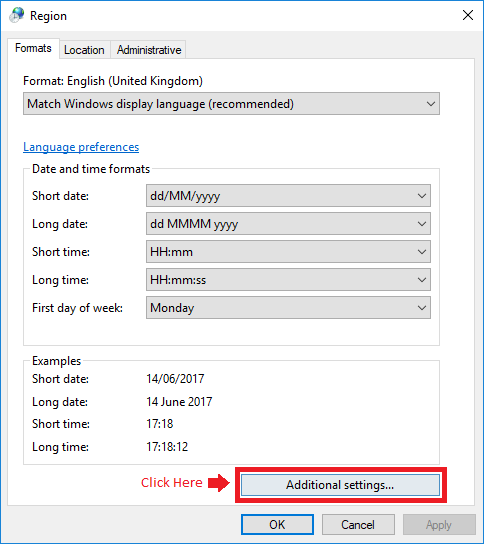

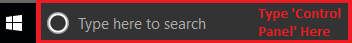

Windows-10

The instructions for Windows-10 are identical to Windows-7 with one exception:

Step 1: Locate the Control Panel by typing 'Control Panel' into the Start Menu search bar.
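If you analyse your data file in code rather than opening it in Excel, the regional list-separator setting doesn't come into play. The Python sketch below either uses the delimiter you specify or lets the standard library's `csv.Sniffer` guess it from the start of the file; guessing can fail on unusual files, in which case pass the delimiter explicitly.

```python
import csv
import io


def read_metrics(csv_text, delimiter=None):
    """Parse a downloaded data file without relying on the computer's regional settings.

    If no delimiter is given, csv.Sniffer guesses one (comma, semicolon or tab)
    from the first few kilobytes of the file.
    """
    if delimiter is None:
        delimiter = csv.Sniffer().sniff(csv_text[:4096], delimiters=",;\t").delimiter
    return list(csv.reader(io.StringIO(csv_text), delimiter=delimiter))


# Typical use: read_metrics(open("data.csv", encoding="utf-8").read())
```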

Sometimes metrics can appear in a different order between participants.

Explanation:

The most common occurrence of this issue is found in Questionnaire metrics.

Downloaded metrics are, among other things, ordered by UTC timestamp, which is the time the metric is received by our database. For Questionnaires, because all of the responses in a Questionnaire are collected and uploaded simultaneously, individual responses can sometimes arrive at the database at slightly different times. This can result in a participant's responses appearing in a different order from other participants'. The same can occur for tasks, where metrics that are uploaded very close together can sometimes appear in a different order than would be expected.

Solution:

In both cases, there is no change necessary nor cause for concern.

If you wish, you can reorder your metrics in Excel by Local Timestamp (the time the metric was initially recorded on the respondent's device) rather than UTC, as this may mitigate the issue.
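If you prefer to do the reordering in code, the Python sketch below sorts rows by participant and then by Local Timestamp. The participant column name `Participant Public ID`, the `Question` column in the example, and the assumption that Local Timestamp values are numeric are all things to check against your own download.

```python
import csv
import io


def reorder_by_local_timestamp(csv_text,
                               participant_column="Participant Public ID",
                               time_column="Local Timestamp"):
    """Sort metrics rows by participant, then by the time each was recorded on the device."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    # Timestamps are assumed to be numeric, so compare them as numbers rather than text.
    rows.sort(key=lambda row: (row[participant_column], float(row[time_column])))
    return rows


example = (
    "Participant Public ID,Local Timestamp,Question\n"
    "p1,1000.5,Q2\n"
    "p1,1000.2,Q1\n"
    "p2,2000,Q1\n"
)
ordered = reorder_by_local_timestamp(example)
```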

Looking at numerical data recorded from your experiment, the numbers may seem to be too big, too small, or contain multiple decimal points, making them difficult to interpret.

Explanation: This problem may occur because of regional variation in the conventions for decimal and thousands separators.

When numerical data such as reaction times are recorded in the browser, they are always encoded with the full stop/period (.) as the decimal separator and the comma (,) as the thousands separator. This is what will be uploaded to Gorilla's data stores. However, in many European countries, the roles of these separators are reversed - the comma is the decimal separator and the full stop is the thousands separator. As a result, when opening a data file expecting this encoding type, the numerical data may be parsed incorrectly.

Solution: To resolve this, you can take the following steps:

Alternatively, your spreadsheet program's advanced settings should include an option to manually specify the decimal and thousands separators.
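The effect of the swapped separators is easy to reproduce. The Python sketch below parses the same recorded value under both conventions; note that Python's own `float()` always expects a period as the decimal separator, regardless of regional settings. The reaction-time value is made up.

```python
def parse_number(text, decimal_sep=".", thousands_sep=","):
    """Convert a number written with the given separators into a float."""
    # Drop thousands separators first, then normalise the decimal separator to '.'.
    return float(text.replace(thousands_sep, "").replace(decimal_sep, "."))


recorded = "512.345"  # a reaction time as written by the browser
as_recorded = parse_number(recorded)  # period as decimal separator
misread = parse_number(recorded, decimal_sep=",", thousands_sep=".")  # European convention
```

Read with the European convention, the 512.345 ms reaction time becomes 512345, which is exactly the "too big" symptom described above.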

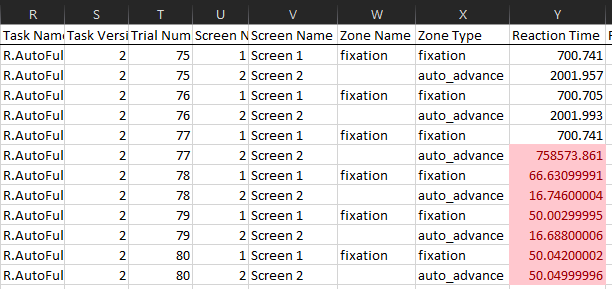

Sometimes participants' reaction times can appear much shorter/longer than you expect to be possible.

Explanation:

In order to accurately measure and record a participant's reaction times, Gorilla must be the active window at all times while the participant is undertaking your Task.

If the participant navigates away from Gorilla during your task - by switching to a different tab/browser or opening and using a different programme - this can lead to inaccurate recording of their reaction times.

This behaviour will be clearly discernible to you in your metrics as a distinct set of reaction times: typically a reaction time much longer than usual followed by a series of shorter reaction times (See the image example below).

Participant behaviour such as this is an example of 'divided attention'. It indicates that the participant is 'distracted' and is not paying full attention to your Task. As such, you will probably want to take this into account in your analysis by plotting and/or inspecting your RTs, or redesign your task to mitigate such behaviour (see our suggestions below).

You will likely only encounter this behaviour if your task has lots of time-limited screens back-to-back, i.e. screens which are set to auto-advance the participant after a set time and which do not require participant input/response in order to advance.
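To find this pattern across many participants, a rough heuristic is to flag any unusually long reaction time that is immediately followed by a run of unusually short ones. The Python sketch below does this relative to the participant's median RT; the thresholds (3 times and 0.3 times the median, runs of at least two) are arbitrary assumptions to tune for your task, and this is not a Gorilla feature.

```python
from statistics import median


def flag_distraction(rts, long_factor=3.0, short_factor=0.3, min_short_run=2):
    """Return indices of long RTs that are immediately followed by a run of short RTs.

    rts: a non-empty list of one participant's reaction times, in trial order.
    """
    typical = median(rts)
    flagged = []
    for i, rt in enumerate(rts):
        if rt <= long_factor * typical:
            continue  # not unusually long
        run = 0
        for later in rts[i + 1:]:
            if later < short_factor * typical:
                run += 1
            else:
                break
        if run >= min_short_run:
            flagged.append(i)
    return flagged


rts = [500, 520, 480, 510, 6000, 20, 15, 10, 490, 505]
suspicious = flag_distraction(rts)
```

In this made-up sequence the 6000 ms trial (index 4) is flagged because three very short RTs follow it.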

Example of typical 'distracted participant' reaction time pattern:

Solution:

While you cannot force participants to stay focused on your task, there are improvements and changes you can implement in your tasks to reduce the likelihood of such participant behaviour.

Here are some suggestions:

There are a couple of reasons why it can look like you have missing data:

1) Have you checked to make sure all the necessary participants are 'included'?

When a participant is 'included', their data is added to your available metrics download. A participant is included automatically when they reach a finish node and complete your experiment. However, by default, participants who are still 'Live' (i.e. still working through your experiment) are not included, and their data won't appear in your download. If you want to include the data from participants who are still live, go to the Participants tab on your experiment, click the 'Actions' button on a participant and select 'Include'. Alternatively, you can use the 'Force Include All' option to include everyone. Note for Pay-per-Participant users: remember that including a participant consumes a participant token and this process is irreversible. Make sure you only include participants you really want the data for, and purchase more tokens if you need to.

2) Are you looking at the right version of your experiment's data?

Participant data is associated with a version of an experiment. If you made any changes to your experiment during data collection, your data will be split across the different versions of your experiment. For example, your overall participant count may be 40, but 20 of them were collected in version 2 of your experiment and another 20 in version 3. To gather the data for all forty participants, you would need to download the data from both version 2 and version 3. To change the version you are downloading from, select the appropriate version from the Version Picker on the Data tab. To see which versions of your experiment your participants saw, go to the Participants tab and review the contents of the Version column.
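Once you have downloaded the data from each version, you can combine the files before analysis. The Python sketch below concatenates rows from several CSV downloads and counts distinct participants. The function name is hypothetical, and the column names (`Participant Private ID`, `Reaction Time`) are assumptions, so check the header row of your own files.

```python
import csv
import io


def combine_versions(files, id_column="Participant Private ID"):
    """Concatenate rows from several per-version downloads and count participants.

    `files` is any iterable of open text files, one per experiment version.
    The default ID column name is an assumption; match it to your own download.
    """
    rows = []
    for f in files:
        rows.extend(csv.DictReader(f))
    participants = {row[id_column] for row in rows}
    return rows, len(participants)


# In-memory stand-ins for the version 2 and version 3 downloads.
v2 = io.StringIO("Participant Private ID,Reaction Time\n101,512.3\n102,498.0\n")
v3 = io.StringIO("Participant Private ID,Reaction Time\n103,530.1\n")
rows, n = combine_versions([v2, v3])
print(len(rows), n)  # 3 3
```

With real files, open each one with `open(path, newline="", encoding="utf-8")` and pass the file objects in the same way.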

3) Are you sure the participant completed all parts of your experiment?

Not every participant will complete the whole of your experiment! At any time, a participant has the right to withdraw, and you will receive no notification of this other than a sudden stop in the metrics and the participant's status remaining 'Live'. If a participant's data seems to stop part way through a task, check whether data from that participant appears in any later stages of your experiment: try to find that participant's unique Private or Public ID in later questionnaires/tasks. If you can't find them there, then they most likely decided to leave! All experiments will experience some form of attrition and, unfortunately, there is little that can be done to prevent this.
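If you have many participants to check, a set difference does the cross-referencing for you. The Python sketch below assumes you have already extracted the participant IDs from the task where the data stop and from each later questionnaire/task; the function name and the IDs are hypothetical.

```python
def find_dropouts(task_ids, later_ids):
    """Return IDs that appear in an earlier task but in none of the later nodes.

    `later_ids` is a list of ID collections, one per later questionnaire/task.
    """
    seen_later = set().union(*later_ids)
    return sorted(set(task_ids) - seen_later)


task_ids = ["p01", "p02", "p03"]   # hypothetical IDs from the task where data stop
later = [["p01"], ["p01", "p03"]]  # IDs found in two later questionnaires/tasks
print(find_dropouts(task_ids, later))  # ['p02']
```

Participants returned by this check most likely withdrew at that point in the experiment.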

4) Did you use any Experiment Requirement settings?

If you choose to restrict participation in your experiment via any of the requirements set on your experiment's recruitment page (i.e. restrictions based on Device Type, Browser Type, Location or Connection Speed), participants who enter your experiment but fail to meet your specified requirements will not see any of your experiment and will therefore have no metrics recorded.

If you wish to calculate the number of participants being rejected by your selected experiment requirements, use a checkpoint node directly after the start node(s) of your experiment. For pay-per-participant account holders, we recommend using this method if you wish to determine which 'live' participants you may wish to reject and which you wish to include.

5) Have you tried all the solutions described above?

If you have reviewed and tested all of the solutions listed on this page and they have not resolved your issue, contact us via our contact form and we'll look into it for you as a matter of priority.

In the vast majority of cases, data generation in Gorilla is very fast, taking only a matter of minutes. However, in some circumstances, data generation can take longer.

Explanation: At any given time, Gorilla is handling a lot of requests for data. At particularly busy times, a queue can form, leading to longer-than-expected wait times before your data are ready to download.

Solution: If it's been 24 hours and your data have still not generated, contact us and we'll look into it for you!

As of October 2017, improvements to the system for recording metrics mean that duplicates are no longer loaded into your results. You should no longer see duplicate metrics appearing in your data download for participant data gathered from October 2017 onwards. Note that we still carry out the safety check described below to make sure that your collected data is stored successfully.

If you believe you are still experiencing this problem please get in touch with us via our contact form.

When the browser uploads metrics to the server, it expects to receive a message back saying the metric was uploaded successfully. If it doesn't receive this message, it retries after a timeout as a failsafe. Sometimes the server is just being a bit slow or the participant's internet connection is unreliable, so while the first metric was stored just fine, the browser thinks it might not have arrived and tries again. This results in two entries of the same metric. In these cases, we think it's most scientifically appropriate to disregard the later values.

The participant hasn't seen the trial twice; the metrics have simply been uploaded to the server twice. The metrics are identical, so just delete one of the rows.
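If you are cleaning your data in code, removing exact duplicate rows while keeping the first occurrence matches the advice above. The Python sketch below works on rows as lists of cell values (as produced by `csv.reader`); the function name and sample rows are hypothetical.

```python
def drop_duplicate_rows(rows):
    """Remove exact duplicate rows, keeping the first occurrence of each."""
    seen = set()
    unique = []
    for row in rows:
        key = tuple(row)  # lists are unhashable, so compare rows as tuples
        if key not in seen:
            seen.add(key)
            unique.append(row)
    return unique


rows = [
    ["101", "trial-1", "512.3"],
    ["101", "trial-1", "512.3"],  # duplicate upload of the same metric
    ["101", "trial-2", "498.0"],
]
print(drop_duplicate_rows(rows))
# [['101', 'trial-1', '512.3'], ['101', 'trial-2', '498.0']]
```

In pandas, `DataFrame.drop_duplicates(keep="first")` gives the same result.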

In October 2017, a rare edge case was found where a participant with a poor internet connection could refresh on the final screen of a task, while the next part of the experiment was loading, and receive the final screen again. This edge case has now been resolved and should no longer occur.

As of August 2017, we have improved how Gorilla records a participant's progress through a task. As a result, it should no longer be possible for participants to see a trial twice due to losing their connection with the server (e.g. because of a poor internet connection), and the metrics data should not contain repeated trial metrics.

If you believe you have found repeated trials in your data where you are not expecting them, please get in touch with us via our contact form.

For data collected before August 2017:

If a participant's connection fails during the experiment, they can fail to synchronise their current progress through the task. When they then refresh the page, they go back to the last point at which their progress was synchronised, which may be earlier in the task (typically, the start of the last trial).

This can lead to some trials appearing twice or metrics appearing to be out-of-order.

In this situation, the participant has seen the trial twice: because their connection failed, their progress wasn't saved to the server, so the server had no way to know where the participant was. In these cases, we think it is best to use the responses from the first exposure to the trial.
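Keeping the first exposure amounts to keeping the first row for each participant/trial combination. The Python sketch below assumes rows are dicts (e.g. from `csv.DictReader`) in the order they were recorded; the function name and the column names `Participant Private ID` and `Trial Number` are assumptions, so substitute the identifiers in your own file. If your metrics appear out of order, sort them by a timestamp column first.

```python
def keep_first_exposure(rows, key_columns=("Participant Private ID", "Trial Number")):
    """Keep only the first row seen for each participant/trial combination.

    Rows must be in recording order; the default column names are assumptions.
    """
    seen = set()
    kept = []
    for row in rows:
        key = tuple(row[c] for c in key_columns)
        if key not in seen:
            seen.add(key)
            kept.append(row)
    return kept


rows = [
    {"Participant Private ID": "101", "Trial Number": "5", "Response": "A"},
    {"Participant Private ID": "101", "Trial Number": "5", "Response": "B"},  # repeat after refresh
    {"Participant Private ID": "101", "Trial Number": "6", "Response": "C"},
]
print([r["Response"] for r in keep_first_exposure(rows)])  # ['A', 'C']
```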

Help for Task Builder 1 and the Code Editor:

Help for Task Builder 2

If your participant receives a 'Please switch to landscape mode' message when completing your task on mobile and this doesn't go away when the phone is rotated, there are two common explanations.

Your participant has auto-rotate locked on their phone. This means the phone, and therefore Gorilla, will not switch to landscape mode when the participant turns their phone. To continue with the task, your participant needs to turn this off. We recommend that when recruiting via mobile, you ask participants to turn auto-rotate lock off before they begin the task.

Your participant has opened the experiment link through an app, such as Facebook or Instagram. This may open the link inside the app rather than within a full browser, and this may not be able to pick up on phone rotation. We strongly recommend that when recruiting via mobile, you ask participants to copy-paste the link into their browser.

Some components in the tooling are labelled with '(Beta)'. This means that the components have been released for public use, but that we intend to make improvements to them before they are considered 'finished'. We cannot guarantee that they will work without fault, and suggest that they be used in addition to our other components rather than instead of them.

If you find any issues or bugs, please provide us with feedback as soon as possible via our contact form.

In the first instance, try using Gorilla in Chrome. We are committed to supporting all browsers, but some bugs may get through our testing.

If you find a bug (which then doesn't happen in Chrome), we would be immensely grateful if you could fill out the Support contact form with details of the bug and the browser you were using, as this will help us address the issue! We won't be able to fix it right away, so please use Chrome in the meantime, but we will get it fixed as soon as possible.

You're viewing the support pages for our Legacy Tooling and, as such, the information may be outdated. Now is a great time to check out our new and improved tooling, and make the move to Questionnaire Builder 2 and Task Builder 2! Our updated onboarding workshop (live or on-demand) is a good place to start.

This is often because questionnaire widgets have the same key. If this is the case, an error message, like the one below, will be displayed.

Error Message

'Two of your nodes have the same key: response-2'

Each widget in the questionnaire must have a unique key. Gorilla will autogenerate a unique key when you add a new widget (response-1, response-2, ... response-n), but you can also rename them to be more meaningful (e.g. anxiety-q1, depression-q1).

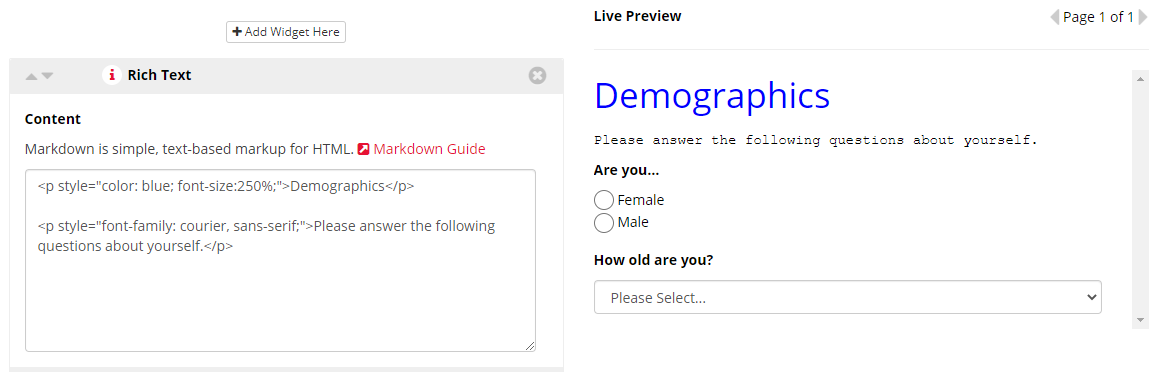
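If you plan your widget keys in a list before renaming them, a quick check can catch clashes before Gorilla reports them. The Python sketch below is a hypothetical helper, not part of Gorilla; it returns every key that appears more than once.

```python
from collections import Counter


def find_duplicate_keys(keys):
    """Return each widget key used more than once, in first-seen order."""
    counts = Counter(keys)
    return [key for key in counts if counts[key] > 1]


keys = ["response-1", "response-2", "anxiety-q1", "response-2"]
print(find_duplicate_keys(keys))  # ['response-2']
```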

You can change the font size, style and colour of your text using HTML applied to each word/phrase.

In the example below, I have changed the colour and enlarged the Demographics heading, as well as changed the font style of the instructions using HTML formatting.
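The formatting itself is ordinary HTML with inline CSS. As a sketch, assuming the widget renders inline `style` attributes, the hypothetical Python helper below generates a `<span>` you could paste into your text; the colour, size and wording are illustrative only.

```python
import html


def styled(text, size_px=None, colour=None, font_style=None):
    """Wrap text in an HTML <span> with inline CSS for size, colour and style."""
    rules = []
    if size_px is not None:
        rules.append(f"font-size: {size_px}px")
    if colour is not None:
        rules.append(f"color: {colour}")
    if font_style is not None:
        rules.append(f"font-style: {font_style}")
    style = "; ".join(rules)
    # Escape the text so characters such as '<' or '&' display literally.
    return f'<span style="{style}">{html.escape(text)}</span>'


print(styled("Demographics", size_px=24, colour="#1f4e79"))
# <span style="font-size: 24px; color: #1f4e79">Demographics</span>
print(styled("Please answer every question.", font_style="italic"))
# <span style="font-style: italic">Please answer every question.</span>
```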


Visit the dedicated TB1 troubleshooting page for more in-depth advice.

Beta Zones are new zones that have been released for public use, but that we intend to make improvements to before they are considered 'finished'. We cannot guarantee that they will work without fault, and suggest that they be used in addition to our other zones rather than instead of them.

In most cases, the version of that zone that has been released in Task Builder 2 will be more stable and have better functionality.

Closed-Beta zones in TB1 are available 'on request' only. These zones are an early implementation of the feature and have not been thoroughly tested across all browsers. Access is given on the understanding that you will provide us with feedback on any bugs as soon as possible. Please also let us know if there's something you'd like to be added to the zone.

Closed-Beta Zones may have limited support information available, so along with use of the Closed Beta Zone, you will be given access to a project containing a quick tutorial or example of the zone so you can see how this feature is set up, and, if desired, clone the example for your own use.

If you would like access to a closed beta zone, you should use our contact form and pick 'Beta Feature Access' from the dropdown menu.